RICE Scoring

What is RICE Scoring?

RICE Scoring is a prioritization framework that helps product managers account for several factors, such as how many users a potential feature will reach over a given time frame, its impact, the effort required to implement it, and the team’s confidence in these estimates, while limiting the influence of personal biases on decision-making.

Establishing a RICE score gives you a structure for making better-informed decisions, so you can focus on the features that will deliver the most value to your users and help achieve your company’s goals.

The RICE framework was developed by the product team at the communications software company Intercom. They found that existing prioritization models didn’t help them decide which of their competing project ideas to work on first.

How does the RICE Scoring Model work?

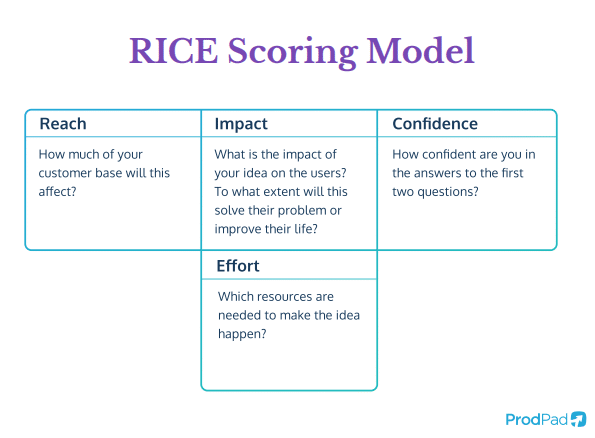

The RICE Scoring Model is a popular prioritization methodology used by many product teams. It relies on four main components to help quantify the potential impact of a project or initiative.

1. Reach – This factor estimates the number of people that the feature or idea will affect.

2. Impact – This factor estimates the degree to which the project will affect the users or customers.

3. Confidence – This factor aims to estimate how sure the team is about the potential impact.

4. Effort – This factor estimates the amount of work required to complete the project. You can express Effort in terms of time, team members, or other resources needed to complete the project.

Reach, Impact, and Confidence measure the benefits of implementing the idea, while Effort measures the cost of implementation.

How to work out a RICE Score

When it comes to scoring each of the elements in the equation, consistency is key. As long as your entire team is consistently using the same scoring system, it doesn’t matter whether you score using percentages or a scale from 1 to 10. You can decide on whatever scoring scale works best for you.

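Our preference is to assign a score from 1 to 10 for each of the four factors. You can then calculate the overall RICE score with the following formula:

$$\text{RICE score} = \frac{\text{Reach} \times \text{Impact} \times \text{Confidence}}{\text{Effort}}$$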

You then prioritize the product, feature, or idea with the highest score first.

The higher the RICE score, the more impactful and valuable the idea is likely to be.
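As a minimal sketch of the calculation, the Python below assumes the standard Intercom formula, (Reach × Impact × Confidence) ÷ Effort, and a 1 to 10 score for every factor. The `Feature` class, the helper names, and the backlog items are hypothetical examples, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # 1-10 score
    impact: float      # 1-10 score
    confidence: float  # 1-10 score
    effort: float      # 1-10 score; a higher score means more work


def rice_score(f: Feature) -> float:
    """Benefits (Reach x Impact x Confidence) divided by cost (Effort)."""
    if f.effort <= 0:
        raise ValueError("effort must be positive")
    return f.reach * f.impact * f.confidence / f.effort


def prioritize(features: list[Feature]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest RICE score first."""
    scored = [(f.name, rice_score(f)) for f in features]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical backlog used only for illustration.
backlog = [
    Feature("Dark mode", reach=8, impact=3, confidence=9, effort=2),
    Feature("SSO login", reach=4, impact=8, confidence=7, effort=6),
    Feature("CSV export", reach=5, impact=5, confidence=8, effort=3),
]

for name, score in prioritize(backlog):
    print(f"{name}: {score:.1f}")
```

Here "Dark mode" (108.0) ranks first, ahead of "CSV export" (66.7) and "SSO login" (37.3): its high Reach and Confidence and its low Effort outweigh its modest Impact.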

Understanding the components of RICE Scoring

Understanding each component of the framework is key to using RICE Scoring successfully to make informed prioritization decisions.

R – Reach

Reach in the RICE Model is an estimate of the potential number of users who will be impacted by a project or feature within a set time period.

You can calculate Reach in different ways: for example, the number of customers per month, daily transactions, or how many users engage with a specific feature. The right measure depends on factors such as the type of project, the stage of the user journey it affects, and the percentage of users who are likely to be affected.

Reach is also constrained by a specific time frame. You could choose to measure over a day, week, month, or whatever time period you think best suits your product.

For instance, consider a social media platform that is developing a new video streaming feature. The Reach in this case could be the number of users who are likely to use and engage with the video streaming feature within a week.
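When an existing feature or page can serve as a proxy, one way to ground a Reach estimate is to count the distinct users who used it within the chosen time frame. The sketch below assumes a hypothetical event log of `(user_id, feature, timestamp)` tuples and a one-week window, matching the video streaming example. Repeat visits by the same user are counted once.

```python
from datetime import datetime, timedelta


def reach_in_window(events, feature, start, period=timedelta(weeks=1)):
    """Count distinct users who used `feature` in [start, start + period)."""
    end = start + period
    users = {
        user_id
        for user_id, feat, ts in events
        if feat == feature and start <= ts < end
    }
    return len(users)


# Hypothetical event log: (user_id, feature, timestamp)
events = [
    ("u1", "video", datetime(2024, 3, 4, 9, 0)),
    ("u2", "video", datetime(2024, 3, 5, 14, 30)),
    ("u1", "video", datetime(2024, 3, 6, 18, 0)),  # repeat user, counted once
    ("u3", "chat", datetime(2024, 3, 6, 19, 0)),   # different feature
    ("u4", "video", datetime(2024, 3, 12, 8, 0)),  # falls outside the week
]

print(reach_in_window(events, "video", datetime(2024, 3, 4)))  # 2
```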

I – Impact

Impact in the RICE Model is an estimate of how strongly a project or feature will affect your users and your business.

This score is crucial because it helps you prioritize product features based on their expected outcomes. However, it can be difficult to establish accurately.

You should consider a range of factors when calculating the Impact score, such as:

- user satisfaction

- revenue growth

- cost savings

These factors can matter to different degrees for users and the business, and their relative importance will depend on your product team’s goals.
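One way to reflect your team’s goals is a weighted average of per-factor scores. In this sketch the weights are hypothetical and must sum to 1, and each factor is assumed to be scored on the same 1 to 10 scale. The weighted average is a common technique but is not something RICE itself prescribes.

```python
# Hypothetical weights reflecting one team's goals; they must sum to 1.
WEIGHTS = {"user_satisfaction": 0.5, "revenue_growth": 0.3, "cost_saving": 0.2}


def weighted_impact(factor_scores, weights=WEIGHTS):
    """Combine per-factor scores (same 1-10 scale) into a single Impact score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(factor_scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * factor_scores[name] for name in weights)


score = weighted_impact(
    {"user_satisfaction": 8, "revenue_growth": 4, "cost_saving": 2}
)
print(round(score, 2))  # 5.6
```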

A key consideration when assigning an Impact score is the magnitude and duration of the expected impact. For example, a feature with a larger but shorter-lived impact might be less valuable than one with a smaller impact that lasts much longer.
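A rough way to compare magnitude and duration is to multiply the expected effect per month by the number of months it is expected to last. This sketch assumes the effect stays constant over that period, which real features rarely do, and the two features and their numbers are hypothetical.

```python
def cumulative_impact(effect_per_month: float, months: float) -> float:
    """Total expected effect: magnitude (per month) multiplied by duration.

    Assumes a constant effect for the whole duration, so treat the result
    as a rough comparison rather than a forecast.
    """
    return effect_per_month * months


# Hypothetical features; effect measured as extra trial sign-ups per month.
short_burst = cumulative_impact(effect_per_month=500, months=2)
steady_gain = cumulative_impact(effect_per_month=150, months=12)

print(short_burst, steady_gain)   # 1000 1800
print(steady_gain > short_burst)  # True
```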

As mentioned, Impact can be challenging to determine accurately. To ensure consistency, it is a good idea to define a set scale of measurement for the impact of the features you’re evaluating. You should base the scale on a set of predefined quantitative metrics that you can use to assign scores, such as trial sign-ups.
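A predefined scale can be encoded as thresholds on a quantitative metric. The sketch below maps a forecast of extra trial sign-ups per month onto a 1 to 10 Impact score. The threshold values are hypothetical and would need to be agreed by your own team.

```python
import bisect

# Hypothetical, team-agreed thresholds: extra trial sign-ups per month needed
# to earn Impact scores 2 through 10 (anything below 50 scores 1).
THRESHOLDS = [50, 100, 200, 400, 800, 1200, 1600, 2400, 3200]


def impact_from_signups(extra_signups_per_month: float) -> int:
    """Map a forecast metric onto the agreed 1-10 Impact scale."""
    # bisect_right counts how many thresholds are <= the forecast.
    return 1 + bisect.bisect_right(THRESHOLDS, extra_signups_per_month)


print(impact_from_signups(250))  # 4
```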

You can also measure Impact using qualitative metrics, but these are much harder to predict accurately, and they can be difficult to measure once the idea has been implemented.

C – Confidence

Confidence in the RICE framework reflects the product team’s level of certainty regarding the potential impact of a feature.

You’ll need to consider a combination of quantitative data and qualitative input. You should also try to avoid personal biases as much as possible.

Quantitative data such as user engagement, conversion rate, or revenue growth can provide valuable insights into the potential impact of a feature. Qualitative factors like market research, user feedback, and competitor analysis can also provide insights that might not be reflected in the data. You should evaluate both types of information together to more accurately calculate the Confidence score.

It is essential to avoid personal biases such as favoritism, overconfidence, or negativity, which can distort the RICE score and lead to poor decisions. Focusing only on relevant data and on feedback from users and stakeholders can help to minimize their influence.

A simple three-point scale from 1 to 3, representing low, medium, and high Confidence respectively, is often enough to measure the Confidence score. For more granularity, you can use a ten-point scale or even a percentage scale, though percentages can become a time sink if you focus on them too much.
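Encoding the three-point scale once, instead of letting each person type free-form labels, helps keep Confidence scores consistent. This sketch uses Python’s `IntEnum`. The helper names are hypothetical, and `as_fraction` only illustrates how a level could be shown as a share of the top score if you prefer a percentage view.

```python
from enum import IntEnum


class Confidence(IntEnum):
    """Three-level Confidence scale: 1 = low, 2 = medium, 3 = high."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def parse_confidence(label: str) -> Confidence:
    """Turn a label such as 'Medium' or ' high ' into the agreed score."""
    try:
        return Confidence[label.strip().upper()]
    except KeyError:
        raise ValueError(f"unknown confidence level: {label!r}") from None


def as_fraction(level: Confidence) -> float:
    """Express a level as a share of the top score (HIGH)."""
    return level / max(Confidence)


print(int(parse_confidence("Medium")))           # 2
print(round(as_fraction(Confidence.MEDIUM), 2))  # 0.67
```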

A high Confidence level indicates that your team is highly confident in the potential impact of the feature. In contrast, a low Confidence level suggests that there is either a higher degree of uncertainty or insufficient information to make an accurate assessment.

To improve the accuracy of your Confidence score and make better-informed decisions, you might consider:

- prioritizing data collection and analysis

- gathering feedback from users and stakeholders

- conducting market research

When a feature has a low Confidence score, you should treat it as a last resort, or as needing more research and testing before implementation.
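To make that rule explicit, you could split the backlog by a Confidence threshold before ranking it. The sketch below assumes a 1 to 10 Confidence scale and a hypothetical cutoff of 5. Features below the cutoff go to a research list instead of competing directly on their RICE score.

```python
def split_by_confidence(features, threshold=5):
    """Separate (name, confidence) pairs into ready and needs-research lists.

    The default threshold of 5 on a 1-10 scale is a hypothetical team choice.
    """
    ready, needs_research = [], []
    for name, confidence in features:
        if confidence >= threshold:
            ready.append(name)
        else:
            needs_research.append(name)
    return ready, needs_research


ready, needs_research = split_by_confidence(
    [("Dark mode", 9), ("AI summaries", 3), ("CSV export", 6)]
)
print(needs_research)  # ['AI summaries']
```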

E – Effort

Effort in the RICE scoring model represents the time and resources required to implement the chosen feature or product.

You can measure Effort in various ways, though it is generally expressed in “person-months”. You can also estimate it from the number of employees required and the number of hours they need to work each week. It can also be worthwhile to consider the financial costs of implementation.
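Headcount and weekly hours convert to person-months with simple arithmetic. This sketch assumes a 40-hour full-time week and 52 / 12 (about 4.33) weeks per month. Both are hypothetical conventions, so adjust them to whatever your organization uses.

```python
HOURS_PER_WEEK_FULL_TIME = 40  # assumption: one full-time person
WEEKS_PER_MONTH = 52 / 12      # assumption: about 4.33 weeks per month


def person_months(people: int, hours_per_week: float, weeks: float) -> float:
    """Convert headcount x weekly hours x duration into person-months."""
    total_hours = people * hours_per_week * weeks
    hours_per_person_month = HOURS_PER_WEEK_FULL_TIME * WEEKS_PER_MONTH
    return total_hours / hours_per_person_month


# Two engineers at 20 hours/week for 13 weeks (one full-time quarter).
print(round(person_months(2, 20, 13), 2))  # 3.0
```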

Communication is key to ensuring that all parties understand the scope and complexity of the feature and what is required to implement it. You will need to work closely with engineering and design teams to ensure that they are scoring in a standardized and accurate way.

By taking these factors into account and using a consistent scoring method, product managers can arrive at a rough estimate of the Effort score.
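Because engineering, design, and product each contribute work, one approach is to sum their estimates into a single Effort value. In this sketch the estimates are in person-months, and rounding up to the nearest half month is a hypothetical convention that keeps the figure deliberately rough and never zero, so it is always safe to divide by.

```python
import math


def effort_score(estimates_by_team: dict[str, float]) -> float:
    """Combine per-discipline estimates into one Effort value in person-months.

    Rounds up to the nearest half month (a hypothetical convention) and
    never returns less than 0.5.
    """
    total = sum(estimates_by_team.values())
    return max(0.5, math.ceil(total * 2) / 2)


print(effort_score({"engineering": 2.2, "design": 0.6, "product": 0.3}))  # 3.5
```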

It is important to note that the Effort score, while crucial to consider when determining the RICE score, should not be the sole factor in prioritization. You should balance it against the other components of the model to help ensure you are making informed and data-driven product decisions.

What are the benefits of using RICE Scoring?

Using RICE Scoring has several key advantages when prioritizing product features. Product managers can assign a score to each feature based on its reach, its impact, the team’s confidence in those estimates, and the effort required.

It takes into account both quantitative and qualitative factors and provides a single score that enables teams to compare and prioritize features effectively.

Some more specific benefits of RICE Scoring include:

- It uses a quantitative metric that helps to prioritize projects or tasks based on their potential impact on your users and business.

- It provides a data-driven approach to prioritization, enabling teams to make better-informed decisions.

- It can be aligned with an organization’s goals, making it a useful strategic planning tool for product teams.

- It helps account for potential biases through the Confidence factor, leading to a more objective and informed decision-making process.

By making data-driven decisions and using prioritization frameworks like the RICE Model, product teams can prioritize product features more objectively, leading to better outcomes and more successful products.

What are the downsides of using RICE Scoring?

While RICE Scoring can be a helpful tool for prioritizing projects and tasks, it is essential to recognize its limitations and consider other approaches depending on the specific needs of the team or organization.

Some of the potential disadvantages of RICE Scoring include:

- It can be time-consuming to assign numerical values to the four criteria, especially when dealing with multiple features or projects.

- It still relies heavily on subjective judgment. The Confidence factor attempts to account for bias, but many scores remain judgment calls based on qualitative factors.

- Its assessment metrics can be affected by other factors, making it hard to determine if the change you have made was the sole reason for a user’s actions. It can be even harder to make accurate predictions of the potential impact if you are using qualitative metrics.

- It can lead to over-prioritization of short-term gains if the metrics aren’t weighted correctly.

You can mitigate many of these issues by applying a pre-agreed set of criteria and using a consistent scale across all of your teams. This helps ensure that everyone measures in the same way and can therefore prioritize using the same metrics.
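A pre-agreed scale is easier to enforce when scores are checked automatically before they are compared. This sketch assumes a hypothetical team agreement that all four factors use whole numbers from 1 to 10. It reports missing or out-of-range scores, not whether the estimates themselves are sound.

```python
# Hypothetical team agreement: whole numbers 1-10 for all four factors.
AGREED_SCALE = range(1, 11)
FACTORS = ("reach", "impact", "confidence", "effort")


def validate_scores(scores: dict[str, int]) -> list[str]:
    """List every factor that is missing or outside the agreed scale."""
    problems = []
    for factor in FACTORS:
        if factor not in scores:
            problems.append(f"{factor}: missing")
        elif scores[factor] not in AGREED_SCALE:
            problems.append(f"{factor}: {scores[factor]} is outside 1-10")
    return problems


print(validate_scores({"reach": 7, "impact": 12, "confidence": 8}))
# ['impact: 12 is outside 1-10', 'effort: missing']
```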